Attention Implementation

When writing out attention, it is clearest to treat each feature matrix as rows indexing the input positions (tokens) and columns indexing the channels.

Cross Attention

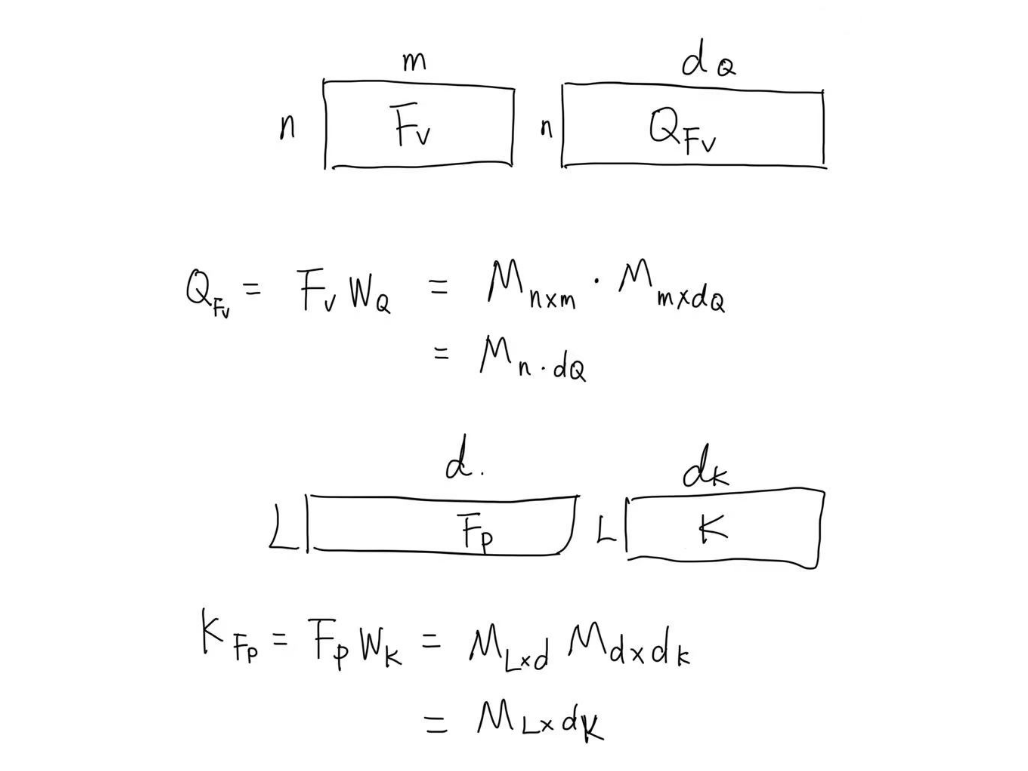

Take UNINEXT as an example. We have features extracted from the visual image and from the prompt, denoted $F_v$ and $F_p$ respectively.

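- $F_v$ has shape $(n, m)$: $n$ rows (visual positions) and $m$ columns (channels).

- $F_p$ has shape $(L, d)$: $L$ rows (prompt positions) and $d$ columns (channels).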

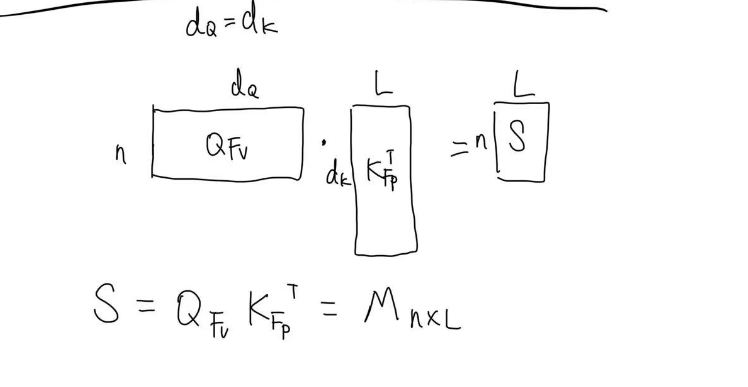

We want to use cross attention to update $F_v$ with $F_p$ by the following formula:

$$
F_v' = F_v + \mathrm{CrossAttention}(F_v, F_p)
$$

Here the first argument, $F_v$, is used as the query, and the second argument, $F_p$, is used as both the key and the value.
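To make the shapes concrete, one common way to expand this is scaled dot-product attention with learned projections. The projection matrices $W_Q$, $W_K$, $W_V$, $W_O$ and the inner width $d_k$ are assumptions introduced here for illustration; they do not appear in the notes above, and the actual UNINEXT layers may differ.

$$
Q = F_v W_Q \in \mathbb{R}^{n \times d_k}, \quad
K = F_p W_K \in \mathbb{R}^{L \times d_k}, \quad
V = F_p W_V \in \mathbb{R}^{L \times d_k}
$$

$$
\mathrm{CrossAttention}(F_v, F_p) = \mathrm{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V W_O \in \mathbb{R}^{n \times m}
$$

with $W_Q \in \mathbb{R}^{m \times d_k}$, $W_K, W_V \in \mathbb{R}^{d \times d_k}$, and $W_O \in \mathbb{R}^{d_k \times m}$. The softmax is taken over each row of the $n \times L$ score matrix, so every visual position gets a weighting over the $L$ prompt positions. The output projection $W_O$ maps back to $m$ channels, which is what makes the residual addition to $F_v$ well defined.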

Bi-directional attention also applies the update in the reverse direction, with $F_p$ as the query and $F_v$ as the key and value:

$$
F_p' = F_p + \mathrm{CrossAttention}(F_p, F_v)
$$
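The two residual updates can be sketched in NumPy as follows. This is a minimal single-head illustration, not the UNINEXT implementation: the projection weights, the inner width `d_k`, the helper names `cross_attention` and `make_params`, and the concrete sizes are all hypothetical. Both updates read the original $F_v$ and $F_p$, as the formulas are written.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_feats, context_feats, w_q, w_k, w_v, w_o):
    """Single-head cross attention; rows are positions, columns are channels."""
    q = query_feats @ w_q        # (num_query, d_k)
    k = context_feats @ w_k      # (num_context, d_k)
    v = context_feats @ w_v      # (num_context, d_k)
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (num_query, num_context)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v @ w_o     # back to (num_query, query_channels)


def make_params(query_dim, context_dim, inner_dim, rng):
    # Hypothetical random projections standing in for learned weights.
    return dict(
        w_q=rng.standard_normal((query_dim, inner_dim)) / np.sqrt(query_dim),
        w_k=rng.standard_normal((context_dim, inner_dim)) / np.sqrt(context_dim),
        w_v=rng.standard_normal((context_dim, inner_dim)) / np.sqrt(context_dim),
        w_o=rng.standard_normal((inner_dim, query_dim)) / np.sqrt(inner_dim),
    )


rng = np.random.default_rng(0)
n, m = 6, 8    # F_v: n visual positions, m channels (illustrative sizes)
L, d = 4, 5    # F_p: L prompt positions, d channels (illustrative sizes)
d_k = 16       # assumed shared inner width

F_v = rng.standard_normal((n, m))
F_p = rng.standard_normal((L, d))

vision_from_prompt = make_params(m, d, d_k, rng)   # query = F_v, key/value = F_p
prompt_from_vision = make_params(d, m, d_k, rng)   # query = F_p, key/value = F_v

# Both directions use the un-updated features, then add the residual.
F_v_new = F_v + cross_attention(F_v, F_p, **vision_from_prompt)
F_p_new = F_p + cross_attention(F_p, F_v, **prompt_from_vision)

print(F_v_new.shape, F_p_new.shape)  # (6, 8) (4, 5)
```

Each direction needs its own set of projections because the query and context channel widths ($m$ and $d$) swap roles, while the residual form keeps every output the same shape as its input.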